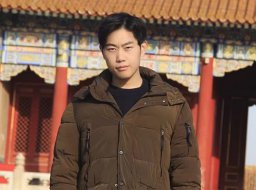

About me

I am Jun Rao (饶隽), a final-year Ph.D. candidate at the School of Computer Science and Technology, Harbin Institute of Technology (Shenzhen), co-advised by Min Zhang and Xuebo Liu. I have published over 20 papers at top conferences and in international journals (ACM/IEEE Transactions), including ACL, EMNLP, SIGIR, MM, CIKM, TALLIP, IPM, ToMM, and TMM. I have a strong preference for methods that are simple, intuitive, and fun. My long-term research goal is to build socially intelligent embodied agents that can perceive and engage in multimodal human communication. As steps towards this goal, my research focuses on

1) the fundamentals of multimodal learning, specifically the representation, translation, fusion, and alignment of heterogeneous data sources,

2) human-centered language, vision, and their applications,

3) efficiency in real-world deployment, covering both the computation and the data required to (pre-, post-)train and use LLMs.

Publications

Exploring and Enhancing the Transfer of Distribution in Knowledge Distillation for Autoregressive Language Models

Jun Rao, Xuebo Liu, Zepeng Lin, Liang Ding, Jing Li, and Min Zhang

Knowledge-Based Systems 2026

SeaPO: Strategic Error Amplification for Robust Preference Optimization of Large Language Models

Jun Rao, Yunjie Liao, Xuebo Liu, Zepeng Lin, Lian Lian, Dong Jin, Shengjun Cheng, Jun Yu, and Min Zhang

EMNLP 2025 (Findings)

APT: Improving Specialist LLM Performance with Weakness Case Acquisition and Iterative Preference Training

Jun Rao, Zepeng Lin, Xuebo Liu, Xiaopeng Ke, Lian Lian, Dong Jin, Shengjun Cheng, Jun Yu, and Min Zhang

ACL 2025 (Findings)

CommonIT: Commonality-Aware Instruction Tuning for Large Language Models via Data Partitions

Jun Rao, Xuebo Liu, Lian Lian, Shengjun Cheng, Yunjie Liao, and Min Zhang

EMNLP 2024

Parameter-Efficient and Student-Friendly Knowledge Distillation

Jun Rao, Xv Meng, Liang Ding, Shuhan Qi, Xuebo Liu, Min Zhang, Dacheng Tao

TMM 2023

What Is the Limitation of Multimodal LLMs? A Deeper Look into Multimodal LLMs through Prompt Probing

Shuhan Qi, Zhengying Cao, Jun Rao*, Lei Wang, Jing Xiao, Xuan Wang

IPM 2023, Corresponding Author

Dynamic Contrastive Distillation for Image-Text Retrieval

Jun Rao, Liang Ding, Shuhan Qi, Meng Fang, Yang Liu, Li Shen, Dacheng Tao

TMM 2023

Where Does the Performance Improvement Come From? A Reproducibility Concern about Image-Text Retrieval

Jun Rao, Fei Wang, Liang Ding, Shuhan Qi, Yibing Zhan, Weifeng Liu, Dacheng Tao

SIGIR 2022

Student Can Also Be a Good Teacher: Extracting Knowledge from Vision-and-Language Model for Cross-Modal Retrieval

Jun Rao, Tao Qian, Shuhan Qi, Yulin Wu, Qing Liao, Xuan Wang

CIKM 2021

Topics of Interest

- Survey Project

  - Awesome-LLMs-Data-AI (A Survey on Data-Centric LLMs: From Creation to Application) [https://github.com/raojay7/Awesome-LLMs-Data-AI/tree/main]

- Training Strategy

  - CommonIT [EMNLP 24, CCF B]

  - Curriculum Consistency Learning for Conditional Sentence Generation. EMNLP 2024.

- Data Synthesis

  - APT [ACL 25, CCF A]

  - SeaPO [EMNLP 25, CCF B]

  - AQuilt: Weaving Logic and Self-Inspection into Low-Cost, High-Relevance Data Synthesis for Specialist LLMs. EMNLP 2025.

- Reasoning

  - REA-RL: Reflection-Aware Online Reinforcement Learning for Efficient Large Reasoning Models. 2025.

  - Dynamic Sampling that Adapts: Iterative DPO for Self-Aware Mathematical Reasoning. 2025.

- Knowledge Distillation

  - PESF-KD [TMM 23, JCR Q1]

  - DCD [TMM 23, JCR Q1]

  - OKD [KBS 26, JCR Q1]

- Evaluation

  - Where Does the Performance Improvement Come From? A Reproducibility Concern about Image-Text Retrieval. [SIGIR 22, CCF A]

  - What Is the Limitation of Multimodal LLMs? A Deeper Look into Multimodal LLMs through Prompt Probing. [IPM 23, JCR Q1]

  - Can Linguistic Knowledge Improve Multimodal Alignment in Vision-Language Pretraining? [ToMM 23, JCR Q1]

  - 3AM: An Ambiguity-Aware Multi-Modal Machine Translation Dataset. [COLING, CCF B]

  - MDIT-Bench: Evaluating the Dual-Implicit Toxicity in Large Multimodal Models. [ACL 25, CCF A]

Internship Experience

- 2025.01-2025.12: NLP Research Intern

  - Huawei Noah’s Ark Lab, China

  - Main Work: Data of LLMs and Math Reasoning.

  - Supervisor: Dr. Xiaojun Meng

  - Academic Outputs: 3 papers submitted

- 2024.01-2024.12: NLP Research Intern

  - Huawei Cloud Computing Technologies Co., Ltd., China

  - Main Work: Specialist LLMs.

  - Supervisor: Dr. Lian Lian

  - Academic Outputs: ACL 2025, EMNLP 2024, EMNLP 2025

- 2021.11-2022.04: NLP Research Intern

  - JD Explore Academy, China

  - Main Work: Multimodal Models and Image-Text Retrieval.

  - Supervisor: Dr. Liang Ding

  - Academic Outputs: SIGIR 2022, TMM ×2

Personal information

I like playing table tennis. My results include eighth place in the team event at the Guangdong Provincial Games for college students, fourth place in mixed doubles at the Shenzhen District Competition, fifth place at the Fifth Shenzhen Cup Amateur Open Competition, 16th place in both the singles and team events at the Shenzhen Municipal Games (mass group), and second place in the team event at Huawei Table Tennis 2024.